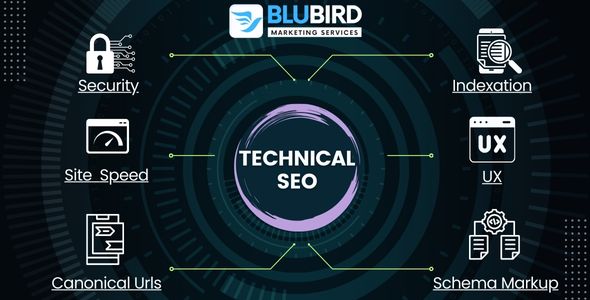

Technical SEO refers to the process of optimizing a website for search engine crawling and indexing. It involves making changes to a website’s structure, code, and content to improve its visibility and ranking on search engine results pages (SERPs). Technical SEO is an essential part of any SEO strategy, as it ensures that search engines can easily access and understand a website’s content.

There are various best practices to follow when it comes to technical SEO. These practices include optimizing website speed and performance, ensuring proper website structure and navigation, implementing schema markup, using canonical tags to avoid duplicate content, and optimizing images and videos for search engines.

To implement technical SEO best practices, there are various tools available to website owners and SEO professionals. These tools can help with tasks such as website auditing, keyword research, backlink analysis, and tracking website rankings. By using these tools, website owners can identify technical issues on their websites and take steps to fix them.

With that overview in place, let’s dive into the topic, starting with some basic terminology.

What is Technical SEO?

Technical SEO refers to the process of optimizing a website’s technical aspects to improve its search engine rankings. Technical SEO services focus on improving website speed, crawlability, indexing, and overall site architecture. It involves optimizing various technical elements of a website, such as the code, server configuration, and website structure, to ensure that search engines can crawl, index, and rank the website effectively.

Technical SEO is an essential aspect of search engine optimization, as it helps search engines understand the website structure and content better. It also helps to improve the user experience by making the website faster and more accessible to users.

The key technical SEO elements that need to be optimized are covered below. By following these best practices, website owners can improve their website’s search engine visibility and provide a better user experience for their visitors.

Website speed:

A fast website is essential for both search engine rankings and user experience. Website speed can be improved by optimizing images, reducing server response time, and minimizing the use of third-party scripts.

Site structure:

A well-structured website makes it easier for search engines to crawl and index the website’s content. Site structure can be improved by using clear navigation, organizing content into categories, and creating a sitemap.

- Use clear and descriptive URLs that reflect the content of each page

- Use a logical and intuitive navigation system that helps users find what they are looking for

- Use internal linking to connect related pages and improve navigation
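To make the first point concrete, here is a minimal sketch of how a descriptive URL could be derived from a page title. The helper names `slugify` and `build_url` and the `example.com` address are illustrative assumptions, not part of any particular CMS or SEO tool.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Strip accents so "Café" becomes "Cafe" instead of losing the letter.
    ascii_title = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Replace every run of non-alphanumeric characters with one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower())
    return slug.strip("-")


def build_url(base: str, category: str, title: str) -> str:
    """Compose a URL whose path mirrors the category the page belongs to."""
    return f"{base.rstrip('/')}/{slugify(category)}/{slugify(title)}"


if __name__ == "__main__":
    # Hypothetical site and page, used only for illustration.
    print(build_url("https://example.com/", "SEO Guides", "What is Technical SEO?"))
    # prints https://example.com/seo-guides/what-is-technical-seo
```

Keeping the category in the path is one possible design choice; it makes the URL reflect the site hierarchy, which supports both navigation and internal linking.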

Mobile-friendliness:

With the majority of internet users accessing websites on mobile devices, it is essential to ensure that a website is mobile-friendly. This can be achieved by using responsive design, optimizing images for mobile devices, and ensuring that the website is easy to navigate on small screens.

- Use a responsive design that adjusts to different screen sizes

- Optimize images and other media for mobile devices

- Use large, easy-to-read fonts and buttons for mobile users

Crawlability:

Crawlability refers to how easily search engine bots can crawl and index a website’s pages. A website that is not crawlable will not be indexed by search engines, which means it will not appear in search results. To ensure that a website is crawlable, website owners should:

- Use a robots.txt file to instruct search engine bots which pages to crawl and which to ignore

- Submit a sitemap to search engines to help them understand the website’s structure and hierarchy

- Fix broken links and 404 errors to ensure that search engine bots can navigate the website without issues

Security:

A secure website is essential for both user trust and search engine rankings. Websites should use HTTPS encryption, have a valid SSL certificate, and be protected against malware and hacking attempts.

- Use HTTPS encryption to protect user data and improve search engine ranking

- Regularly update and patch website software to prevent security vulnerabilities

- Use strong passwords and two-factor authentication to protect website accounts from unauthorized access

By focusing on these key aspects of technical SEO, website owners and marketers can improve their search engine ranking and provide a better user experience for their visitors.

Improving Site Visibility

One of the primary benefits of technical SEO is that it helps improve a website’s visibility in search engine results pages. By optimizing the technical elements of a website, search engines can better understand the content on the site and rank it accordingly. This can lead to higher rankings, more traffic, and ultimately more revenue for the website owner.

Off-Page SEO vs On-Page SEO vs Technical SEO

| | Off-Page SEO | On-Page SEO | Technical SEO |
| --- | --- | --- | --- |
| Definition | Activities performed outside the website to improve rankings and visibility in search engine results. | Optimization techniques implemented directly on the website to improve search engine rankings. | Optimizing the technical aspects of a website to improve search engine visibility and user experience. |
| Objectives | Increase website authority, build quality backlinks, improve online reputation, and increase organic search rankings. | Enhance website content, improve keyword targeting, optimize meta tags and titles, and improve user experience. | Optimize website speed and performance, fix indexing and crawling issues, improve website architecture, and ensure mobile-friendliness. |
| Strategies | Link building, guest blogging, social bookmarking, social media marketing, influencer outreach, content marketing, and online reputation management. | Keyword research, content optimization, meta tags optimization, HTML tag optimization, URL optimization, internal linking, and user experience improvement. | Website crawlability, XML sitemap creation, robots.txt optimization, website speed optimization, SSL certificate implementation, mobile optimization, and structured data implementation. |
| Key Benefits | Higher search engine rankings, increased organic traffic, improved online visibility, increased brand awareness, and enhanced credibility. | Improved organic search rankings, increased website traffic, reduced bounce rates, improved user engagement and experience, and higher conversion rates. | Improved website performance, higher search engine rankings, enhanced user experience, increased website traffic, better indexing and crawling, and improved website security. |
| Examples | Acquiring high-quality backlinks from reputable websites, sharing content on social media platforms, building an online reputation through positive reviews, guest posting on relevant blogs, etc. | Keyword optimization in website content, creating relevant and informative meta tags, improving website loading speed, optimizing website navigation, etc. | Implementing structured data markup for rich snippets, conducting website audits for technical issues, optimally configuring robots.txt and XML sitemaps, improving website accessibility, etc. |

Best Practices for Technical SEO

The following best practices form the core of technical SEO:

Use of Sitemaps

Sitemaps are an essential part of technical SEO. They help search engines understand the structure of a website and its content. A sitemap is a file that lists all the pages on a website, along with additional information about each page, such as the last time it was updated.

To ensure that search engines can crawl and index all the pages on a website, it’s important to create and submit a sitemap to search engines. In addition, it’s important to keep the sitemap up-to-date by adding new pages and removing old ones.
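As a rough illustration of what such a file contains, the sketch below builds a small XML sitemap with Python’s standard library. The function name `build_sitemap`, the URLs, and the dates are placeholders chosen for this example; a real site would generate the page list from its own content and may well rely on a CMS plugin instead.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return sitemap XML for a list of (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # The last-updated date mentioned above, in YYYY-MM-DD form.
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages, used only for illustration.
    print(build_sitemap([
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/blog/technical-seo", "2024-02-01"),
    ]))
```

Regenerating the file whenever pages are added or removed is one simple way to keep the sitemap up to date before resubmitting it.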

Optimizing Robots.txt

The robots.txt file tells search engine crawlers which pages or sections of a website should not be crawled. Optimizing the robots.txt file can improve the crawl efficiency of a website by keeping bots away from low-value areas. Note that robots.txt controls crawling rather than indexing: a blocked URL can still be indexed if other pages link to it, so pages that must stay out of search results need a noindex directive instead.

When optimizing the robots.txt file, it’s important to make sure that important pages are not blocked from search engines. In addition, it’s important to avoid blocking JavaScript and CSS files, as these files are important for rendering the website correctly.
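One way to check for accidental blocking is to test a list of important paths against the rules before deploying them. The sketch below uses Python’s standard `urllib.robotparser`; the `ROBOTS_TXT` rules, the paths, and the helper name `blocked_paths` are assumptions made up for this example. Python’s parser applies the first matching rule, which can differ from how individual search engines resolve conflicting rules, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: private areas are blocked, static assets stay open.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Allow: /assets/
"""


def blocked_paths(robots_txt: str, paths: list[str], agent: str = "*") -> list[str]:
    """Return the paths that the given rules forbid the agent to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(agent, path)]


if __name__ == "__main__":
    important = ["/", "/blog/technical-seo", "/assets/site.css", "/assets/app.js"]
    # An empty list means no important page, CSS, or JavaScript file is blocked.
    print(blocked_paths(ROBOTS_TXT, important))
```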

Fixing Broken Links

Broken links can have a negative impact on a website’s search engine rankings. They can also result in a poor user experience for visitors to the website. It’s important to regularly check for broken links and fix them as soon as possible.

There are several tools available that can help identify broken links on a website. Once broken links have been identified, they should be fixed by either updating the link or removing it altogether.
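To show what such a check does under the hood, here is a minimal sketch that extracts the links from one page and reports those that return an error status. The `fetch_status` callable is a hypothetical stand-in for a real HTTP client, passed in so the example runs without network access; the page URL and status codes are invented for illustration.

```python
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect the href of every <a> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def find_broken_links(
    page_url: str, html: str, fetch_status: Callable[[str], int]
) -> list[tuple[str, int]]:
    """Return (url, status) for each link on the page with a 4xx/5xx status."""
    extractor = LinkExtractor()
    extractor.feed(html)
    broken = []
    for href in extractor.links:
        # Resolve relative links against the page they appear on.
        absolute = urljoin(page_url, href)
        # Skip mailto:, tel:, and other non-HTTP links.
        if not absolute.startswith(("http://", "https://")):
            continue
        status = fetch_status(absolute)
        if status >= 400:
            broken.append((absolute, status))
    return broken


if __name__ == "__main__":
    # Fake status table standing in for real HTTP requests.
    statuses = {
        "https://example.com/about": 200,
        "https://example.com/blog/old-post": 404,
    }
    page = '<a href="/about">About</a> <a href="old-post">Old post</a>'
    print(find_broken_links("https://example.com/blog/", page, statuses.__getitem__))
    # prints [('https://example.com/blog/old-post', 404)]
```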

HTTPS Implementation

HTTPS is a secure version of HTTP that encrypts data between a website and its visitors. Implementing HTTPS can help improve a website’s search engine rankings and provide a more secure browsing experience for visitors.

To implement HTTPS, a website needs to obtain an SSL/TLS certificate and configure the web server to use it. Once HTTPS is in place, it’s important to update all internal links to use HTTPS and to redirect HTTP URLs to their HTTPS counterparts, ideally with permanent (301) redirects.
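The link-updating and redirect steps can be sketched as two small helpers. This is an illustration under simple assumptions (default ports, a single hostname), with made-up function names and URLs; in practice the redirect itself is usually configured in the web server or CDN rather than in application code.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def https_redirect_target(url: str) -> Optional[str]:
    """Return the HTTPS URL an HTTP request should redirect to, else None."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None
    netloc = parts.netloc
    # Drop the explicit default HTTP port so it is not carried over to HTTPS.
    if netloc.endswith(":80"):
        netloc = netloc[:-3]
    return urlunsplit(parts._replace(scheme="https", netloc=netloc))


def upgrade_internal_links(links: list[str], site_host: str) -> list[str]:
    """Switch links to our own host to HTTPS; leave all other links untouched."""
    upgraded = []
    for link in links:
        parts = urlsplit(link)
        if parts.scheme == "http" and parts.hostname == site_host:
            upgraded.append(urlunsplit(parts._replace(scheme="https")))
        else:
            upgraded.append(link)
    return upgraded


if __name__ == "__main__":
    print(https_redirect_target("http://example.com/page?q=1"))
    # prints https://example.com/page?q=1
```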

Essential Technical SEO Tools

Technical SEO requires the use of several tools to analyze website data and identify issues that need to be addressed. The following tools are essential for any technical SEO audit:

Google Search Console

Google Search Console is a free tool that provides valuable data on a website’s performance in Google search results. It allows webmasters to monitor their website’s health, including crawl errors, indexing issues, and security concerns. It also provides insights into the search queries that drive traffic to the site and the pages that receive the most clicks.

Screaming Frog

Screaming Frog is a website crawler that allows users to analyze a website’s structure and identify technical issues. It can quickly identify broken links, duplicate content, missing meta descriptions, and other on-page SEO issues. It also provides data on page titles, headings, and images, making it a valuable tool for optimizing website content.

Semrush

Semrush is an all-in-one SEO tool that provides data on keyword rankings, backlinks, and website traffic. It also includes a site audit feature that identifies technical issues, such as broken links and missing meta descriptions. Semrush is particularly useful for competitive analysis, allowing users to compare their website’s performance to that of their competitors.

Ahrefs

Ahrefs is a comprehensive SEO tool that provides data on backlinks, keyword rankings, and website traffic. It also includes a site audit feature that identifies technical issues, such as broken links and missing meta descriptions. Ahrefs is particularly useful for link building, allowing users to identify high-quality backlink opportunities and track their progress over time.

Conclusion

In conclusion, technical SEO is a crucial aspect of website optimization that focuses on improving the technical elements of a website to enhance its visibility and ranking on search engines. By implementing best practices such as optimizing site speed, fixing broken links, and ensuring mobile-friendliness, website owners can improve their site’s user experience and search engine performance.

To achieve technical SEO success, website owners can leverage a wide range of tools and resources, including Google Search Console, Screaming Frog, Semrush, Ahrefs, and Moz. These tools can help identify technical issues, track website performance, and provide insights into how to improve site visibility and ranking.